artificial intelligence, virtual reality, education

Virtual Reality Methods and Systems. Brown U.

I am passionate about technologies that help people think and focus. I want to use virtual reality and artificial intelligence to expand human cognition. I am also currently working with an educational venture that helps kids learn better.

At Brown, in parallel with my own research, I developed some of the core components of the VENLab software and led its development team. From the drivers to the 3D experience, I coded most of the VR headset system, which is now patented both nationally and internationally. At the time, this wireless VR system enabled anyone, anywhere in the world, to walk in shared simulations and to see and interact with each other in real time using body tracking and reconstruction. This experience transformed my academic focus and propelled me into the exciting challenges of entrepreneurship.

Creative Smarts Inc. 2015-now

Big Hatch Inc. 2014-2015

I was lucky to have a rich academic journey in artificial intelligence. I studied many approaches, such as complex systems modeling, agent-based simulation, and machine learning. I worked in and with some of the best labs in the world on some of the most sophisticated systems, such as virtual reality headsets and robots. I learned to solve problems and became fond of the creative process. Above all, I was thrilled to build innovative tools that help people understand something or do something better.

I studied and designed artificial agents using a multidisciplinary approach spanning computer science, artificial intelligence, and cognitive science. I was particularly interested in perception-action systems that exhibit stable yet flexible behavioral patterns, adapting to chaotic and dynamic environments while maintaining goal-directed behavior. Understanding ubiquitous and apparently elementary behaviors, such as locomotion and navigation, can lead to more fundamental questions about human behavior and cognition.

Postdoctoral fellow, Serre Lab, Brown University 2012-2013

Postdoctoral fellow, VENLab, Brown University 2009-2012

Ph.D. artificial intelligence 2008

The presentation of my projects reflects my own interests. Please refer to the PIs' communications and publications, or to the labs' webpages, for more information on these projects.

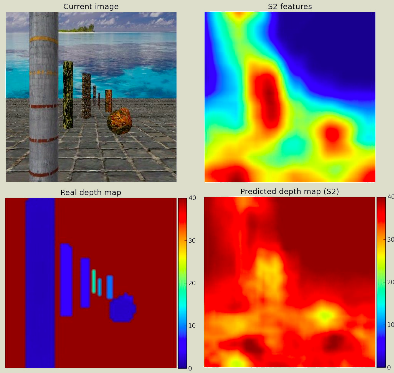

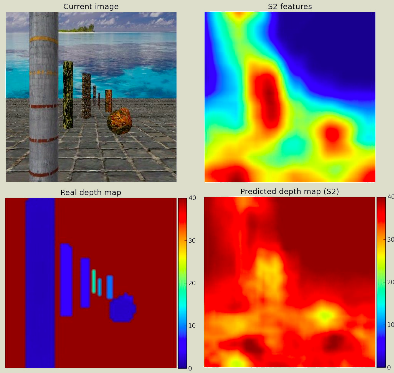

Agent / Perception-Action Architecture

The aim of this project was to build a biologically inspired vision system for the visual control of online steering in complex environments [Bonneaud et al. 2013]. We proposed to couple the mathematical-psychology model of locomotor dynamics developed in the VENLab [Warren and Fajen 2008] with the neurally plausible computational vision model developed in Serre's lab [Serre et al. 2007]. The vision model processes visual input (simulated optic flow and stereo vision) to extract information about the environment. The locomotion model then uses that information to steer the agent toward its goals while it interacts with other objects in the environment (e.g., avoiding obstacles, walking along walls, or forming groups). The top figure has four panels: top left, a first-person view of a virtual scene containing different types of objects; top right, a heat map of the motion energy extracted by the vision model (S2 layers); bottom left, the ground-truth depth map; bottom right, the depth map approximated from the motion energy. The bottom figure is a snapshot of a virtual world in which we run our agent using both the vision and the locomotion models. This work is supported by ONR grant #N000141110743 and the Robert J. and Nancy D. Carney Fund for Scientific Innovation. Collaborators: Prof. Thomas Serre, Youssef Barhomi (Serre's Lab, Brown Univ.); Prof. William H. Warren (VENLab, Brown Univ.).
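A simple geometric fact explains why depth can be approximated from motion at all, and a few lines make it concrete. The sketch assumes three things: pure forward translation at speed T with no eye or body rotation, noise-free flow, and a stationary point. Under those assumptions, a point at eccentricity θ from the heading direction and distance D moves across the visual field at θ̇ = T sin θ / D, so D = T sin θ / θ̇. The function names are illustrative. This is not the Serre Lab model, which derives its depth estimate from motion-energy features rather than from a closed-form formula.

```python
import math


def angular_velocity(x: float, z: float, speed: float) -> float:
    """Angular velocity (rad/s) of a stationary point at lateral offset x and
    forward distance z (m), for an observer translating forward at `speed` (m/s).
    Follows from theta = atan2(x, z) with dz/dt = -speed and dx/dt = 0."""
    return speed * x / (x * x + z * z)


def depth_from_flow(theta: float, theta_dot: float, speed: float) -> float:
    """Distance (m) to a stationary point from its eccentricity `theta` (rad,
    measured from the heading direction) and angular velocity `theta_dot` (rad/s),
    assuming pure forward translation at `speed` (m/s)."""
    if theta_dot == 0.0:
        # Zero flow: the point is infinitely far or lies on the heading axis
        # (focus of expansion), where flow alone cannot recover depth.
        return math.inf
    return speed * math.sin(theta) / theta_dot


# Usage: a point 2 m ahead and 2 m to the side, observer walking at 1 m/s.
x, z, speed = 2.0, 2.0, 1.0
theta = math.atan2(x, z)
estimate = depth_from_flow(theta, angular_velocity(x, z, speed), speed)
print(f"estimated {estimate:.3f} m, true {math.hypot(x, z):.3f} m")
```

Flow vanishes near the focus of expansion, so depth estimates from motion alone are least reliable straight ahead. Stereo is one cue that can help there.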

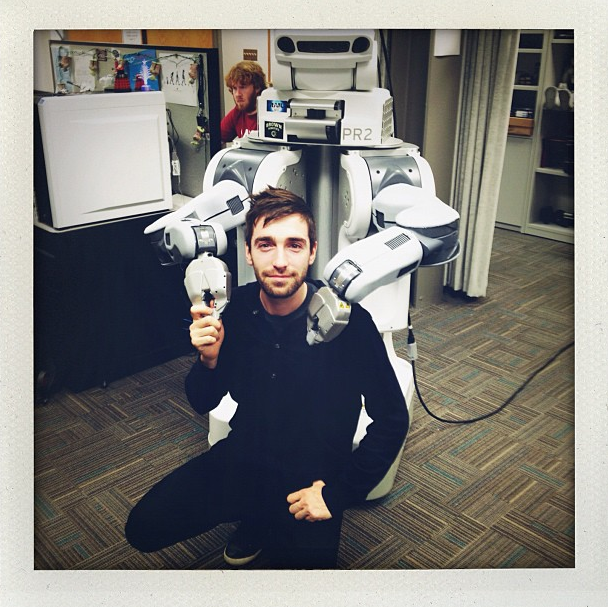

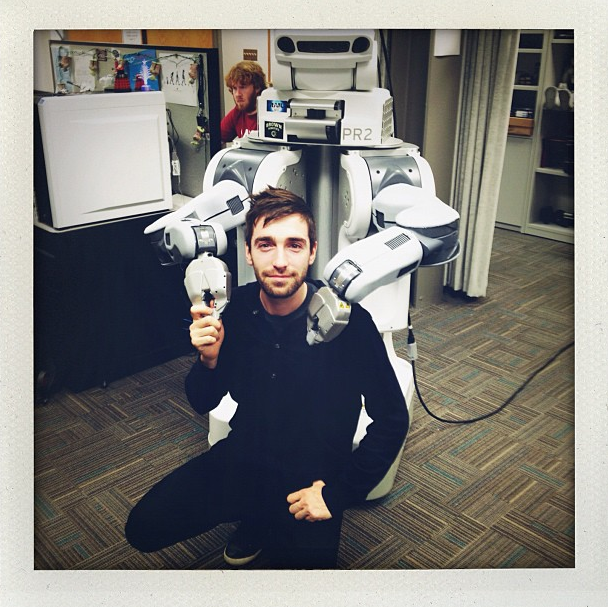

Human / Robot Locomotion

The goal of this project is to port our perception-action model of human locomotion to robotic platforms. To do so, we borrowed two TurtleBots from Brown's RLab (PI: Chad Jenkins) and had access to their PR-2 robot. We want to show that our model can be ported to ROS (Willow Garage) and that a robot following a human can produce human-like trajectories. The figure shows me facing the massive PR-2 of the RLab (Brown Univ.) for the first time. Thanks to the members of the WPI-RAIL lab, who let us visit them while the PR-2 was in their hands. This work is supported by ONR grant #N000141110743 and the Robert J. and Nancy D. Carney Fund for Scientific Innovation. Collaborators: Prof. Thomas Serre and Youssef Barhomi (Serre Lab, Brown Univ.); Prof. Chad Jenkins (RLab, Brown Univ.).
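To run a heading-dynamics model on a wheeled robot, its outputs (forward speed and turning rate) must become the velocity command the base accepts. In ROS, a planar base typically takes a `geometry_msgs/Twist` message (`linear.x`, `angular.z`). The sketch below covers only that last step. All names are illustrative, and the limits and wheel separation are hypothetical placeholders, not the specifications of the TurtleBots or the PR-2. The wheel-speed step applies only to differential-drive bases such as the TurtleBot.

```python
from dataclasses import dataclass


@dataclass
class BaseLimits:
    # Hypothetical placeholder values, not a real robot's specifications.
    wheel_separation: float = 0.23  # m, distance between the two drive wheels
    max_linear: float = 0.5         # m/s
    max_angular: float = 2.0        # rad/s


def clamp(value: float, low: float, high: float) -> float:
    return max(low, min(high, value))


def velocity_command(speed: float, turn_rate: float, limits: BaseLimits) -> tuple[float, float]:
    """Saturate a model's forward speed (m/s) and turning rate (rad/s) into a
    (linear, angular) pair, i.e. the fields a planar Twist command carries."""
    v = clamp(speed, -limits.max_linear, limits.max_linear)
    w = clamp(turn_rate, -limits.max_angular, limits.max_angular)
    return v, w


def wheel_speeds(v: float, w: float, limits: BaseLimits) -> tuple[float, float]:
    """Differential-drive inverse kinematics: (left, right) wheel linear speeds.
    Positive w is a counterclockwise (left) turn, so the right wheel runs faster."""
    half = limits.wheel_separation / 2.0
    return v - w * half, v + w * half


# Usage: feed the steering model's speed and heading rate (phi_dot) each control tick.
limits = BaseLimits()
v, w = velocity_command(1.0, -3.0, limits)
print(v, w, wheel_speeds(v, w, limits))
```

Saturating before computing wheel speeds keeps a fast-turning model from commanding wheel speeds the base cannot deliver. The cost is that the robot's path drifts from the model's whenever a limit is hit.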

Crowd / Agent Interactions

In this project, we model individual locomotor behavior in complex scenarios and self-organized behavior in crowds. I work on the dynamical model of online steering being developed in the VENLab [Warren 2008]. The model simulates observed human paths and, in the case of obstacle avoidance, the routes selected around the obstacle. A current challenge is to scale the model up to account for the dynamics of groups and crowds. Here, locomotion serves as a case study for investigating how a perception-action system couples with its environment. Building on the dynamics of perception and action [Warren 2006, Kelso 1995, Beer 2000], we ask how behavior can emerge as a stable solution of the system's dynamics (self-organization), which we call behavioral dynamics [Warren 2006]. In crowds, collective behavior also emerges from interactions within the agent(s)-environment system: the self-organizing pedestrians determine the group, and the group in turn partially enslaves the dynamics of the pedestrians. The top figure shows two groups of 10 artificial agents walking toward each other; the middle figure shows artificial agents walking in a virtual corridor; the bottom figure shows a swarm of artificial agents. These are snapshots from the VENLab's multi-agent locomotion simulator. This work is supported by NIH grant R01 EY010923. Collaborators: Prof. William H. Warren, Kevin Rio, and Dr. Adam Kiefer (VENLab, Brown U.); Prof. Pierre Chevaillier (CERV/LISyC, France).
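A minimal sketch of steering as behavioral dynamics, following the published form of the Fajen and Warren goal/obstacle steering model. Heading φ obeys a damped second-order equation in which the goal attracts and each obstacle repels:

φ̈ = −b φ̇ − k_g (φ − ψ_g)(e^(−c1 d_g) + c2) + Σ k_o (φ − ψ_o) e^(−c3 |φ − ψ_o|) e^(−c4 d_o)

Here ψ and d are the direction of and distance to each object. The parameter values are illustrative assumptions, and the function names are hypothetical. This is not the VENLab implementation.

```python
import math
from dataclasses import dataclass


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


@dataclass
class SteeringParams:
    # Illustrative values (assumption); refit to data before drawing conclusions.
    b: float = 3.25     # damping
    k_g: float = 7.5    # goal stiffness
    c1: float = 0.4     # decay of goal attraction with distance
    c2: float = 0.4     # minimum goal attraction
    k_o: float = 198.0  # obstacle repulsion gain
    c3: float = 6.5     # angular decay of repulsion
    c4: float = 0.8     # distance decay of repulsion


def heading_acceleration(x, y, phi, phi_dot, goal, obstacles, p):
    """phi'' = damping + goal attraction + sum of obstacle repulsions."""
    gx, gy = goal
    psi_g = math.atan2(gy - y, gx - x)
    d_g = math.hypot(gx - x, gy - y)
    acc = -p.b * phi_dot
    acc -= p.k_g * wrap(phi - psi_g) * (math.exp(-p.c1 * d_g) + p.c2)
    for ox, oy in obstacles:
        err = wrap(phi - math.atan2(oy - y, ox - x))
        d_o = math.hypot(ox - x, oy - y)
        acc += p.k_o * err * math.exp(-p.c3 * abs(err)) * math.exp(-p.c4 * d_o)
    return acc


def simulate(start, heading, goal, obstacles=(), speed=1.0, dt=0.01, steps=1000, p=None):
    """Integrate the heading dynamics (semi-implicit Euler) at constant walking speed."""
    if p is None:
        p = SteeringParams()
    x, y = start
    phi, phi_dot = heading, 0.0
    path = [(x, y)]
    for _ in range(steps):
        phi_dot += heading_acceleration(x, y, phi, phi_dot, goal, obstacles, p) * dt
        phi += phi_dot * dt
        x += speed * math.cos(phi) * dt
        y += speed * math.sin(phi) * dt
        path.append((x, y))
        if math.hypot(goal[0] - x, goal[1] - y) < 0.1:  # close enough: stop
            break
    return path


# Usage: walk to a goal 5 m ahead, with and without an obstacle slightly off the line.
straight = simulate((0.0, 0.0), 0.0, goal=(5.0, 0.0))
detour = simulate((0.0, 0.0), 0.0, goal=(5.0, 0.0), obstacles=[(2.5, 0.1)])
print(len(straight), min(y for _, y in detour))
```

If an obstacle sits exactly on the line to the goal, φ − ψ_o stays zero and its repulsion vanishes, so the straight path is an equilibrium. The small lateral offset in the usage example is enough to make the agent commit to one side of the obstacle. This is how route selection arises in this kind of model.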

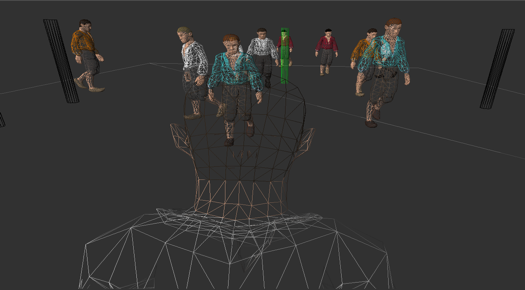

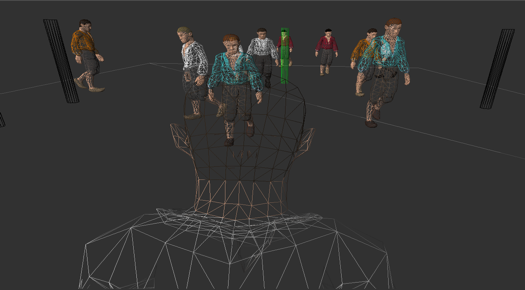

Agent Modeling and Multi-agent Simulation

I built this project to provide a simulation framework for running virtual experiments with many agents on the VENLab's VR equipment. The platform gives the lab a multi-agent simulator for easily prototyping new locomotor-dynamics scenarios from configuration files. It supports real-time, rendered simulations of agents navigating in virtual environments. A GUI makes it possible to quickly design new scenarios from the existing library of agent behaviors for multi-agent simulation in complex environments. The simulator also provides a modular computational framework that lets developers easily add new agent behaviors. The software relies on C++, POSIX, OpenGL, and X11/Win32 (cross-platform), and is based on the AReVi library.
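A configuration-driven behavior library like the one described above can be sketched as follows. Everything here is hypothetical: the registry, the class names, and the JSON scenario schema are illustrative. The sketch is written in Python rather than the simulator's C++ and does not reproduce the AReVi API. Each behavior contributes an angular acceleration to its agent's heading; for brevity, the goal term is simplified and has no distance dependence. New behaviors are added by subclassing and registering them, and a scenario file only names behaviors and their parameters.

```python
import json
import math


def wrap(angle: float) -> float:
    """Wrap an angle to [-pi, pi)."""
    return (angle + math.pi) % (2 * math.pi) - math.pi


BEHAVIORS = {}  # hypothetical registry: behavior type name -> class


def register(name: str):
    """Class decorator that adds a behavior to the library under `name`."""
    def decorator(cls):
        BEHAVIORS[name] = cls
        return cls
    return decorator


class Behavior:
    """Base class: a behavior returns an angular acceleration (rad/s^2)."""
    def contribution(self, agent: "Agent", world: "World") -> float:
        raise NotImplementedError


@register("damping")
class Damping(Behavior):
    def __init__(self, b: float = 3.25):
        self.b = b

    def contribution(self, agent, world):
        return -self.b * agent.phi_dot


@register("goal")
class Goal(Behavior):
    """Simplified goal attraction (no distance dependence), for illustration."""
    def __init__(self, x: float, y: float, k: float = 7.5):
        self.x, self.y, self.k = x, y, k

    def contribution(self, agent, world):
        psi = math.atan2(self.y - agent.y, self.x - agent.x)
        return -self.k * wrap(agent.phi - psi)


class Agent:
    def __init__(self, x, y, phi, speed, behaviors):
        self.x, self.y, self.phi, self.speed = x, y, phi, speed
        self.phi_dot = 0.0
        self.behaviors = behaviors


class World:
    def __init__(self, agents):
        self.agents = agents

    def step(self, dt: float) -> None:
        # Evaluate every agent against the same world state before moving anyone,
        # so the result does not depend on the order in which agents are stored.
        accels = [sum(b.contribution(a, self) for b in a.behaviors) for a in self.agents]
        for agent, acc in zip(self.agents, accels):
            agent.phi_dot += acc * dt
            agent.phi += agent.phi_dot * dt
            agent.x += agent.speed * math.cos(agent.phi) * dt
            agent.y += agent.speed * math.sin(agent.phi) * dt


def load_scenario(text: str) -> World:
    """Build a world from a JSON scenario description (hypothetical schema)."""
    spec = json.loads(text)
    agents = []
    for a in spec["agents"]:
        behaviors = []
        for b in a["behaviors"]:
            params = {k: v for k, v in b.items() if k != "type"}
            behaviors.append(BEHAVIORS[b["type"]](**params))
        agents.append(Agent(a["x"], a["y"], a["phi"], a["speed"], behaviors))
    return World(agents)


SCENARIO = """
{"agents": [
  {"x": 0, "y": 0, "phi": 1.0, "speed": 1.0,
   "behaviors": [{"type": "damping", "b": 3.25},
                 {"type": "goal", "x": 5, "y": 0}]}
]}
"""
```

Adding a behavior, such as an obstacle or neighbor term, means writing one more registered class. Any scenario that names that class picks it up without changes to `World.step`.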

2013

Towards a biologically-inspired vision system for the control of locomotion in complex environments.

VSS 2013 Conference, Naples FL, May 2013.

Quantifying the Coherence of Pedestrian Groups.

35th Annual Conference of the Cognitive Science Society (in press).

2012

A behavioral dynamics approach to modeling realistic pedestrian behavior.

In the Proceedings of the PED'2012, the Int. Conference on Pedestrian and Evacuation Dynamics, Switzerland, June 2012.

Speed coordination in pedestrian groups: Linking individual locomotion with crowd behavior.

Journal of Vision 12(9).

Modélisation multi-agents de la locomotion collective de groupes de piétons (Multi-agent modeling of the collective locomotion of pedestrian groups).

In the Proceedings of the JFSMA'2012, Journées Francophones des Systèmes Multi-Agents, France, October 2012.

2011

Accounting for patterns of collective behavior in crowd locomotor dynamics for realistic simulations.

In the journal LNCS Transactions on Edutainment (Springer).

2010

Analyse expérimentale des biais dans les simulations à base de populations d'agents (Experimental analysis of biases in simulations of agent populations).

Revue d’Intelligence Artificielle, 2010, 24(5), 601-624.

2009

In virtuo experiments based on the multi-interaction system framework: the RéISCOP meta-model.

CMES: Computer Modeling in Engineering & Sciences, 2009, 47, 299-330.

Experimental Study of Agent Population Models with a Specific Attention to the Discretization Biases.

In the proceedings of the European Simulation and Modelling Conference ESM’09, UK, October 2009, 323-331.

Data consistency in distributed virtual reality simulations applied to biology.

In the proceedings of the ICAS'09, The 5th Int. Conference on Autonomic and Autonomous Systems, Spain, April 20-25, 2009.

Biais computationnels dans les modèles de peuplements d’agents (Computational biases in agent population models).

In the proceedings of the Journées Francophones sur les Systèmes Multi-Agents JFSMA'09, France, 2009, 145-154.

2007

Oriented pattern agent-based multi-modeling of exploited ecosystems.

In the proceedings of the 6th EUROSIM congress on modelling and simulation, Ljubljana, Slovenia, September 9-13, 2007, 7 pages.

A model of fish population dynamics based on spatially explicit trophic relationships.

In the proceedings of the ECEM'07, The 6th European Conference on Ecological Modelling, Italy, November 27-30 2007.

Multi-modélisation agent orientée patterns : Application aux écosystèmes exploités (Pattern-oriented agent-based multi-modeling: application to exploited ecosystems).

In the proceedings of the Journées Francophones sur les Systèmes Multi-Agents JFSMA'07, France, October 17-19, 2007, 119-128.

*Best Paper Award*.

2005

Toward an Empirical Schema-Based Model of Interaction for Embedded Conversational Agents.

In the proceedings of the Joint Symposium on Virtual Social Agents AISB 2005, UK, April 2005.

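Modélisation et extraction de schémas dialogiques dans les traces d’interactions langagières des forges logicielles (Modeling and extraction of dialogic schemas in traces of language interactions in software forges).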

Workshop JSM'05, Journées Sémantique et Modélisation, Paris, March 2005.

2004

A Socio-Cognitive Model for the characterization of schemes of Interaction in Distributed Collectives.

In "Distributed Collective Practice: Building new Directions for Infrastructural Studies", Workshop of the CSCW 2004 conference, Chicago, November 2004.

Layering Social Interaction Scenarios on Environmental Simulation.

Joint Workshop on Multi-Agent and Multi-Agent-Based Simulation MABS 2004, New York, USA, 2004, 78-88.

Throughout my academic and professional career, I have focused on modeling interactions in agent-environment and agent-agent systems. As a computer scientist specializing in artificial intelligence and multi-agent simulation, I also have multidisciplinary training in cognitive science. That training gave me helpful material for modeling the behavior and societies of artificial agents.

During my Ph.D., I applied my background in distributed artificial intelligence to the modeling of complex systems, such as large ecosystems with many interacting species in changing environments. I built a society of model-agents that lets modelers build, analyze, and experiment with their models in an intelligent virtual modeling environment [Bonneaud and Chevaillier 2007], and I implemented it in a multi-model, multi-scale simulator.

At Brown, I focused on crowd dynamics and on how interacting pedestrians give rise to collective, self-organized phenomena [Bonneaud and Warren 2012]. I developed a multi-agent simulator that researchers now use to prototype human experiments and to explore various locomotor scenarios through simulation. I also designed and conducted experiments with human participants to test these questions empirically [Bonneaud et al. 2011].

In 2012, I joined the Serre Lab to explicitly model the visual experience of an artificial pedestrian [Bonneaud et al. 2013]. I investigated the human locomotor perception-action loop by coupling the Serre Lab's vision model to the VENLab's locomotion model. The vision model accounts for the neural mechanisms that extract information from various visual cues. With GPU acceleration, the system ran in artificial worlds in near real time, and we trained the large-scale (10^8 units) neural-network vision model on large artificial datasets. We then tested the system on robotic platforms using TurtleBots and a PR-2.